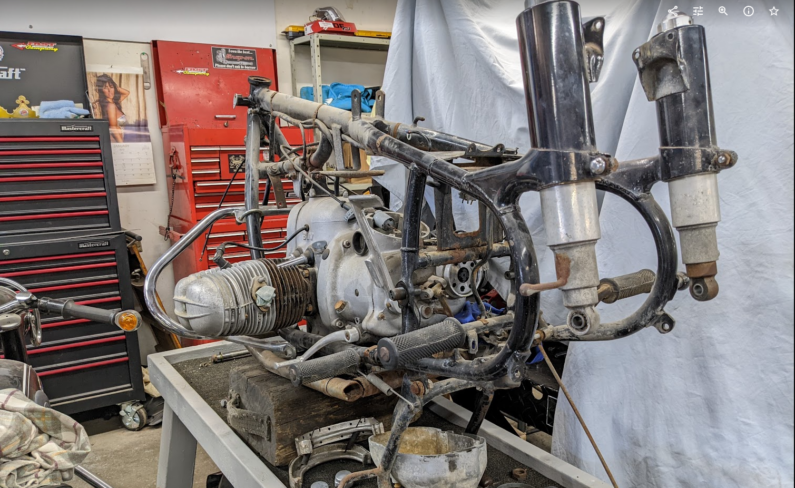

Zen and the Art: Take 2

The lost and found motorcycle reveals its patina-esqe interior, and the parts scrounging.

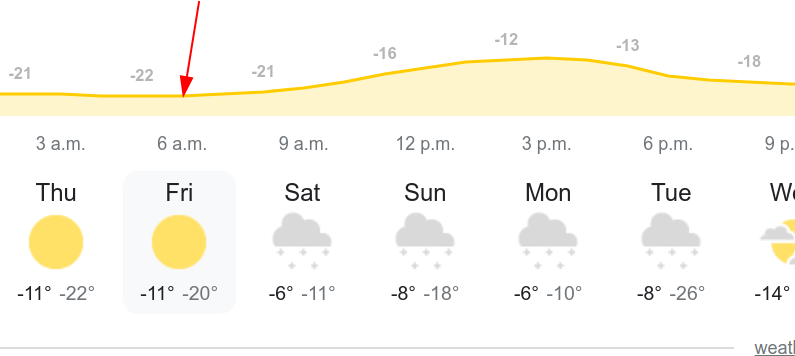

The trials and tribulations of warm hands, #5

OK, this is getting tiring, but… tomorrow will be -22C when I head out for work and some errands. So, no time like the present to groundhog-day-repeat-the-same-warm-hands tricks.

bike #4 arrived, shakedown run

Bike #4 arrives, shakedown run taken.

Time for a new bike?

OK, you may call be a sucker for punishment. But, Yet Another Bike is on the way. The last 3 have each been owned for less than 1 year, so I’m going to work a bit more on that problem.

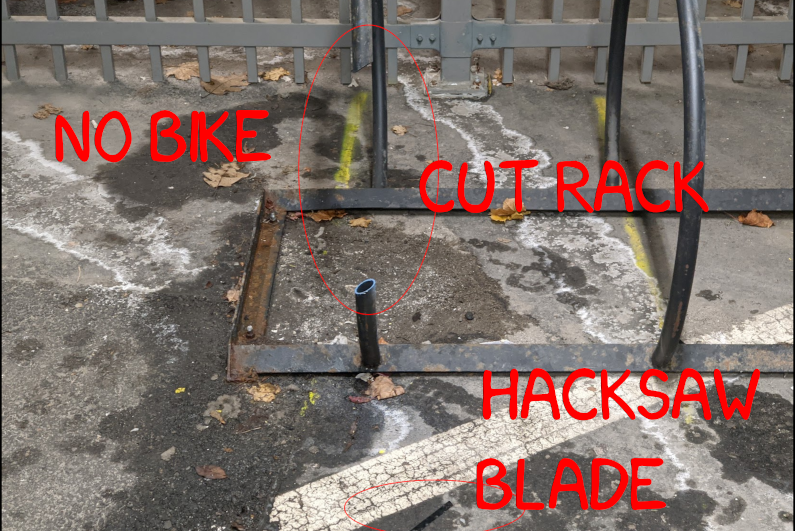

Thieves Strike Again

The Waterloo Regional Police have caller ID. The dispatcher was “I see you had a bike stolen last year, oh, and one other. I can save you some time if the details are the same.” Huh.

Long Strange Trip