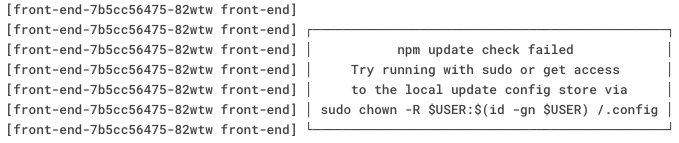

Let’s say one day you are casually browsing the logs of your giant Kubernetes cluster. You spot this log message: “npm update check failed”. Hmm. Fortunately you have an egress firewall enabled, blocking all outbound traffic other than to your well-known APIs, so you know why it failed. Now you start to worry that some of your projects might be able to auto-update, because it was difficult or impossible to fence them all off.

Why would you want a container updating itself from within? You rebuild your images once a week in CI and deploy those tested, scanned updates. Auto-updating live-imports the risk we talked about in the ESLint debacle: somewhere in the universe, a maintainer uses the same password on more than one site, it gets compromised, and an attacker pushes new code to their package. And then, boom, you’d install it without knowing.

As a backup for your ‘egress firewall’ you have also made the root filesystem read-only in all your containers, which makes new installs like this much harder. But most containers need at least one writable volume somewhere (even if only for the environment-variable-to-/etc/foo.conf dance on startup).
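If you want to confirm that setup from inside a running container, a quick self-check helps: is `/` really read-only, and which mounts are still writable? This is a minimal Python sketch, assuming a Linux container where `/proc/mounts` is available; the helper names and the list of ignored pseudo-filesystem types are hypothetical choices for illustration, not part of any existing tool.

```python
import os
from typing import FrozenSet, List

# Pseudo-filesystems that are normally mounted rw but are not where an
# installer would drop files. This list is an assumption; adjust to taste.
DEFAULT_IGNORED_TYPES: FrozenSet[str] = frozenset(
    {"proc", "sysfs", "cgroup", "cgroup2", "devpts", "mqueue"}
)


def root_is_read_only(path: str = "/") -> bool:
    """Return True if the filesystem holding `path` is mounted read-only."""
    # statvfs reports the mount flags; ST_RDONLY is set on read-only mounts.
    return bool(os.statvfs(path).f_flag & os.ST_RDONLY)


def writable_mounts(
    mounts_text: str, ignored_types: FrozenSet[str] = DEFAULT_IGNORED_TYPES
) -> List[str]:
    """List mount points mounted 'rw', given the contents of /proc/mounts."""
    result = []
    for line in mounts_text.splitlines():
        # /proc/mounts fields: device, mount point, fs type, options, dump, pass
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        mount_point, fs_type, options = fields[1], fields[2], fields[3].split(",")
        if fs_type in ignored_types:
            continue
        if "rw" in options:
            result.append(mount_point)
    return result
```

Inside a container you would call `root_is_read_only()` and pass the text of `/proc/mounts` to `writable_mounts`; every path it returns is somewhere an auto-updater could still write.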

Here the solution is a magic, ‘lightly documented’ environment variable NO_UPDATE_NOTIFIER. But…

The code behind this (for Node.js) is here. You can see it would spawn out to a separate process. It doesn’t run this check until a while (a day?) after you start up, so you might be lulled into a false sense of security. And it would be hard to construct a generic test for this behaviour. So yeah, the best seat-belts are the egress firewall, strict as strict can be, and a read-only rootfs.
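Assuming the notifier in question is the `update-notifier` npm package (an assumption; its internals vary between versions), its gating logic can be modelled roughly as below. This Python sketch is not the package's code and the function names are hypothetical. The model disables itself if `NO_UPDATE_NOTIFIER` is present with any value, if `NODE_ENV` is `test`, or if `--no-update-notifier` is on the command line; otherwise it only spawns its detached check process once the interval has passed since the last recorded check. The package also skips CI environments and honours an `optOut` flag in its config file; both are left out here.

```python
from typing import Mapping, Sequence

# Assumed default interval: one day, in milliseconds. Check the version
# you actually ship, since this may differ.
ONE_DAY_MS = 24 * 60 * 60 * 1000


def notifier_disabled(env: Mapping[str, str], argv: Sequence[str]) -> bool:
    """Model of update-notifier's opt-out checks (CI detection left out)."""
    return (
        "NO_UPDATE_NOTIFIER" in env  # presence is what counts, not the value
        or env.get("NODE_ENV") == "test"
        or "--no-update-notifier" in argv
    )


def should_spawn_check(
    env: Mapping[str, str],
    argv: Sequence[str],
    last_check_ms: int,
    now_ms: int,
    interval_ms: int = ONE_DAY_MS,
) -> bool:
    """Return True if a detached update-check process would be spawned now."""
    if notifier_disabled(env, argv):
        return False
    # On first run the stored timestamp is initialised to "now", so nothing
    # is spawned until a full interval has elapsed: the quiet first day.
    return now_ms - last_check_ms >= interval_ms


if __name__ == "__main__":
    start = 1_000_000
    print(should_spawn_check({}, [], start, start + 60_000))  # False: too soon
    print(should_spawn_check({}, [], start, start + ONE_DAY_MS))  # True
    print(should_spawn_check({"NO_UPDATE_NOTIFIER": ""}, [], start, start + ONE_DAY_MS))  # False
```

The first `print` is the uncomfortable one: a freshly started container stays quiet for its whole first interval, which is why a short smoke test will not catch the behaviour.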
