Tag: devops

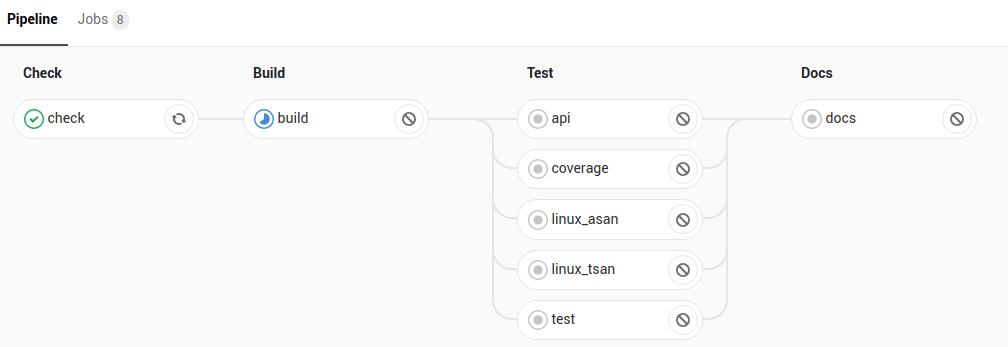

Deleting pipelines in GitLab 11.6

GitLab 11.6.0 was just released, and one of the new features is deleting a pipeline. Why might you want to delete a little bit of history? Well, perhaps you inadvertently caused some private information (a password, say) to be printed. Or perhaps you left Auto DevOps enabled and ended up with a whole slew of useless…

When managed software goes bad… A cloud tale

So the other day I wrote of my experience with the first ‘critical’ Kubernetes bug, and how the mitigation took down my Google Kubernetes Engine (GKE) cluster. In that case, Google pushed an upgrade and missed something with the Calico migration (Calico had been installed by them as well; nothing had been changed by me). Ooops. Today,…

Suicidal clouds cause consternation

Another day, another piece of infrastructure cowardly craps out. Today it was GKE. It updated itself to 1.11.3-gke.18, and then had this to say (while nothing was working: all pods were stuck in Creating, and the nodes would not come online since the CNI failed). 2018-12-03 21:10:57.996 [ERROR][12] migrate.go 884: Unable to store the…

Next Chautauqua: Continuous Integration!

Tomorrow (Tues 27, 2018) we’re going to have the next meetup to talk Continuous Integration. Got a burning desire to rant about the flakiness of an infinite number of shell scripts bundled into a container and shipped to a remote agent that is more or less busy at different hours? Wondering if it’s better to use…

Separate CI cluster woes: can hierarchical caching help?

So I have this architecture where we have two separate Kubernetes clusters. The first cluster runs in GKE, the second on ‘the beast of the basement’ (and then there’s a bunch in AKS, but they are for different purposes). I run GitLab Runner on these two Kubernetes clusters. But… trouble is brewing. You see, runners are…