Tag: continuous

The rabbit-hole of log parsing: istio-proxy sidecar log routing and parsing with fluent-bit

Logging. It’s the modern-day Tower of Babel. So I’m using an EFK (Elasticsearch, Fluent, Kibana) stack for log management. It’s a common combination (don’t argue with me that Logstash is better; it’s merely different). And as I’ve wandered down the rabbit hole of trying to get semantic meaning from log messages, I’ve learned a…

My upcoming webinar on security surprises in cloud migration

I’m giving a guest webinar for RootSecure on Wednesday, Oct 24th, at 11:00 EDT (Toronto time). You can register if you want to hear a bit about the things that might surprise you as you migrate from a safe, secure, comfy closet to a big airy cloud. For the last few years I have been working on cloud, OpenStack,…

When your security tools cost more than the thing they protect

Let’s say you have a microservices app. It’s got a bunch of containers that you’ve orchestrated with Kubernetes: Deployments, Pods, DaemonSets all over the place. Autoscaling. You are happy. Now it’s time to implement that pesky ‘security’ step. You are a bit nervous: there’s no internal firewall, and all the services listen on port…

They got in via the logging! Remote exploits and DDoS using the security logs

So the other day I posted my pride-and-joy regex. You know, this one? `^(?<host>[^ ]*) - \[(?<real_ip>)[^ ]*\] - (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*) "(?<referer>[^\"]*)" "(?<agent>[^\"]*)" (?<request_length>[^ ]*) (?<request_time>[^ ]*) \[(?<proxy_upstream_name>[^ ]*)\] (?<upstream_addr>[^ ]*) (?<upstream_response_length>[^ ]*) (?<upstream_response_time>[^ ]*) (?<upstream_status>[^ ]*) (?<last>[^$]*)` Seems simple, right? But it leads to…
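To make the regex above a little more concrete, here is a minimal sketch that transcribes it to Python's `re` module. Python spells named groups `(?P<name>...)` rather than the Ruby/Onigmo-style `(?<name>...)` that fluent-bit's regex parser accepts, and the typographic dashes and quotes are restored to ASCII `-` and `"`. The names `NGINX_INGRESS_RE`, `parse_line` and `SAMPLE` are hypothetical, and the sample line is invented; the field names suggest an ingress-nginx access log, but that is an assumption. One thing the sketch makes visible: as written, `(?<real_ip>)` is an empty group, because the `[^ ]*` that consumes the address sits outside it, so `real_ip` always comes back as an empty string.

```python
from __future__ import annotations

import re

# Transcription of the regex with Python-style named groups (?P<name>...).
# The empty real_ip group is kept exactly as in the original pattern.
NGINX_INGRESS_RE = re.compile(
    r'^(?P<host>[^ ]*) - \[(?P<real_ip>)[^ ]*\] - (?P<user>[^ ]*) '
    r'\[(?P<time>[^\]]*)\] "(?P<method>\S+)(?: +(?P<path>[^\"]*?)(?: +\S*)?)?" '
    r'(?P<code>[^ ]*) (?P<size>[^ ]*) "(?P<referer>[^\"]*)" "(?P<agent>[^\"]*)" '
    r'(?P<request_length>[^ ]*) (?P<request_time>[^ ]*) '
    r'\[(?P<proxy_upstream_name>[^ ]*)\] (?P<upstream_addr>[^ ]*) '
    r'(?P<upstream_response_length>[^ ]*) (?P<upstream_response_time>[^ ]*) '
    r'(?P<upstream_status>[^ ]*) (?P<last>[^$]*)'
)


def parse_line(line: str) -> dict[str, str | None] | None:
    """Return the named fields of one access-log line, or None if it doesn't match."""
    m = NGINX_INGRESS_RE.match(line)
    return m.groupdict() if m else None


# Hypothetical log line, invented for illustration only.
SAMPLE = (
    '10.0.0.1 - [10.0.0.1] - - [24/Oct/2018:11:00:00 +0000] '
    '"GET /api/health HTTP/1.1" 200 17 "-" "curl/7.58.0" 78 0.002 '
    '[default-web-80] 10.1.2.3:8080 17 0.002 200 abc123'
)

if __name__ == "__main__":
    fields = parse_line(SAMPLE)
    print(fields["host"], fields["method"], fields["path"], fields["code"])
    # The real_ip group matches nothing, so this is always ''.
    print(repr(fields["real_ip"]))
```

Running it prints `10.0.0.1 GET /api/health 200` followed by `''` for `real_ip`.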

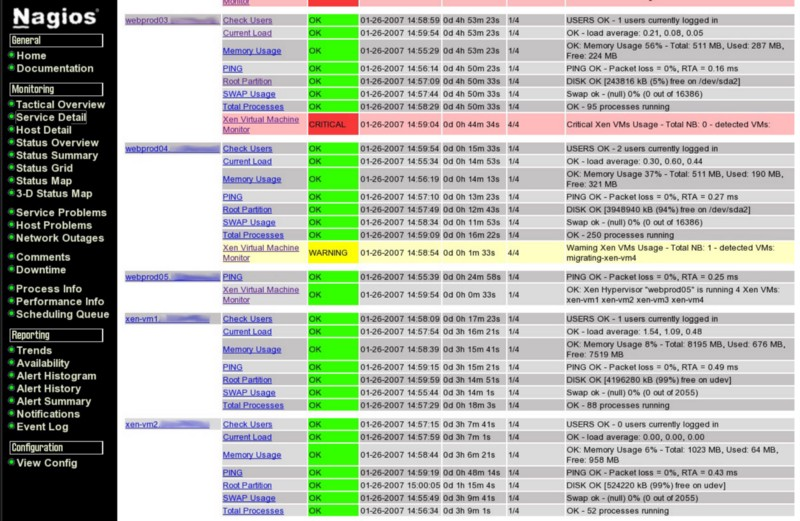

The infinite uncertainty engine: what cloud can learn from telecom

A lot of my background is telecom-related. And one of the things telecom is very good at is alarm and fault management. It’s an entire industry devoted to making sure that when something goes wrong, the right action happens immediately, and that when things trend or fluctuate, they get caught. It’s complex, and a lot…