Shodan adds Canada page!

Woke up this morning to a Twitter response from @achillean: my wish was granted, and we now have a Canada dashboard in Shodan. Sweet. Thanks John! (Since you have some time on your hands, have you considered doing an AWS vs Azure vs Google vs DigitalOcean dashboard? 🙂) Now, let's take a look. As you…

I yaml’d my network: netplan and the new world order

[Put on your peril-sensitive sunglasses. If you are expecting a high-level blog post here, this is not for you :] So, systemd. It's eating the world. I joked that soon the kernel would run from systemd and systemd would replace GRUB (and it is!). I have a relatively complex home network, and was feeling pretty…

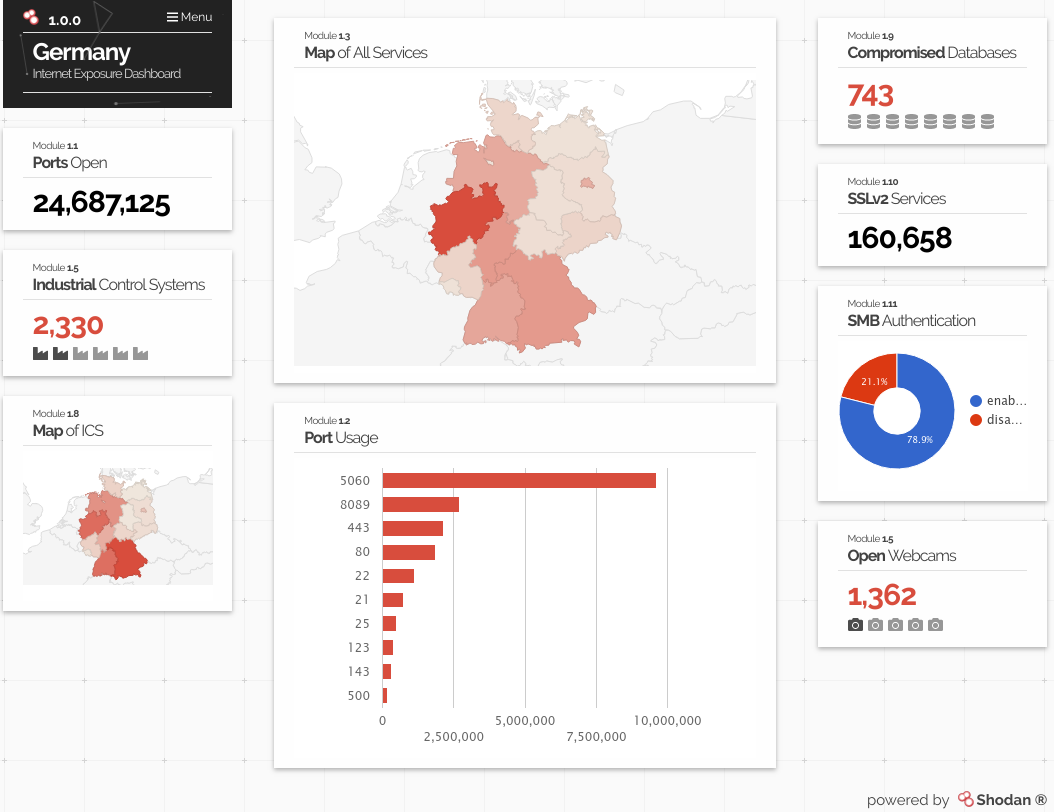

Using Shodan to assess a country’s risk: revisited

Earlier I wrote about using Shodan to assess country-specific risks, highlighting a particular router that is widespread in the UAE. The good folks at Shodan have a country-exposure dashboard, and they’ve just added Switzerland and Germany. They are focusing on Industrial Control Systems, which is another topic I am fond of. They have…

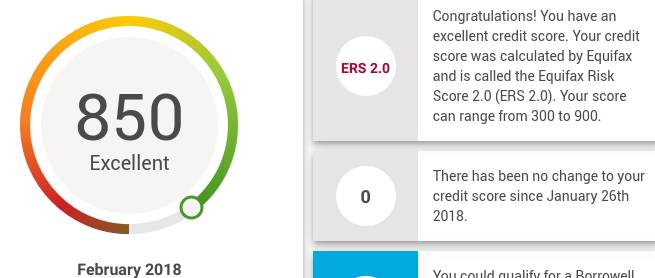

Credit checks as a way to assess risk

Last year there were some unprecedented leaks, including one at Equifax, the credit agency. The Equifax leak may be one of the worst possible, given its broad scope and the information the company held. So you can be forgiven for being somewhat reluctant when I suggest you should be checking your credit score… using Equifax.…

More LoRaWAN RF madness. The Tektelic Kona Pico Gateway

So earlier I wrote about the Dragino, a small and economical LoRa gateway. But I was a bit sad that it was a single-channel gateway and therefore technically not compliant (although it does work). I was glad to see the Dragino's open-source strategy: it ran OpenWrt and the code was all there. Today on yours truly’s…

Long Strange Trip