Tag: agile

Ceph in the city: introducing my local Kubernetes to my ‘big’ Ceph cluster

Ceph has long been a favourite technology of mine. It's a storage mechanism that just scales out forever. Gone are the days of RAIDs and complex sizing and setup. Chuck all your disks into however many servers you have, and let Ceph take care of it. Want more read speed? Let it have more read replicas.…

Downward scaling the cloud

One of the things you will find on your journey through the cloud is that downward scalability is very poor. Cloud is designed around a high upfront cost (people's time and equipment, $$$). But after that, it scales very linearly for a long way. This is great if you are a cog…

Attack of the rack: the killer was in the house!

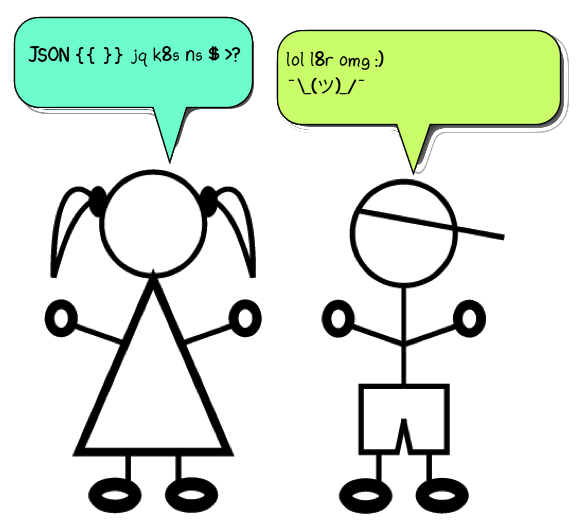

You know the joke about the crappy horror movie where they trace the IP, it's 127.0.0.1, and the killer was in the house (localhost)? True story: this just happened to me. So settle down and listen to a tale of NAT, Proxy, Kubernetes, and Fail2Ban (AKA Rack Attack in Ruby land). You see, we run a modest…
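To see why "the killer was in the house" is so painful, here is a minimal sketch of a per-IP throttle in the spirit of Fail2Ban or Rack Attack. It is a hypothetical illustration, not either tool's real API and not the setup from the post: `PerIpThrottle`, `simulate`, the limit of 3, and the example addresses are all invented. Once NAT or a proxy rewrites every client to 127.0.0.1, all users share one bucket and innocent requests start getting blocked.

```python
from collections import defaultdict


class PerIpThrottle:
    """Hypothetical fixed-window throttle keyed on the apparent client IP.

    Loosely modelled on the idea behind Fail2Ban / Rack Attack; this is
    not either tool's actual API.
    """

    def __init__(self, limit: int) -> None:
        self.limit = limit
        self.counts: defaultdict[str, int] = defaultdict(int)

    def allow(self, remote_addr: str) -> bool:
        # Count this request against whatever address the server *sees*.
        self.counts[remote_addr] += 1
        return self.counts[remote_addr] <= self.limit


def simulate(requests: list[str], limit: int) -> list[bool]:
    """Return allow/deny decisions for a sequence of apparent source IPs."""
    throttle = PerIpThrottle(limit)
    return [throttle.allow(addr) for addr in requests]


# Three real users (documentation IP ranges), two requests each.
direct = ["203.0.113.5", "198.51.100.7", "192.0.2.9"] * 2

# The same traffic after NAT/proxy: every request appears to come from localhost.
proxied = ["127.0.0.1"] * len(direct)

if __name__ == "__main__":
    print(simulate(direct, 3).count(False))   # 0 blocked
    print(simulate(proxied, 3).count(False))  # 3 blocked
```

With a limit of 3 per address, the direct traffic passes untouched, while the proxied copy of the exact same traffic has half its requests refused.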

The agony of NFS: the knife twists a bit more

Recently Google announced Filestore. I was all set to rejoice after my heartbreaks of recent days. After all, it seemed like NFS might be the answer for me, but I would have to run it outside of Kubernetes. So it was with great joy that I signed up for the beta program, and even…

Kubernetes and private registries and names: your registry credentials everywhere

It's 2018, so you have at least a few private container registries lurking about. And you are using Kubernetes to orchestrate your Highly Available Home Assistant (which you never make an acronym of, since people would laugh at you) as well as other experiments. You've read the book on namespaces and are all in on…
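The "credentials everywhere" problem comes from the fact that Kubernetes Secrets are namespace-scoped, so a registry pull secret has to exist in every namespace that pulls from that registry. The sketch below shows one possible approach, not necessarily the one the post settles on: it builds a `kubernetes.io/dockerconfigjson` Secret per namespace and wraps them in a `List`. The secret name `regcred`, the registry host, the credentials, and the namespace names are all hypothetical placeholders.

```python
import base64
import json


def docker_config_secret(name: str, namespace: str, registry: str,
                         username: str, password: str) -> dict:
    """Build a kubernetes.io/dockerconfigjson Secret manifest as a dict.

    Secrets are namespace-scoped, so one copy is needed per namespace.
    """
    # The "auth" field is base64("username:password"), as in ~/.docker/config.json.
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    config = {"auths": {registry: {"username": username,
                                   "password": password,
                                   "auth": auth}}}
    # Secret data values must themselves be base64-encoded.
    encoded = base64.b64encode(json.dumps(config).encode()).decode()
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": encoded},
    }


# Hypothetical namespaces and registry; substitute your own.
namespaces = ["home-assistant", "experiments"]
manifest = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [docker_config_secret("regcred", ns, "registry.example.com",
                                   "bot", "s3cret") for ns in namespaces],
}

if __name__ == "__main__":
    print(json.dumps(manifest, indent=2))
```

The output could be piped into `kubectl apply -f -`. Pods still need to reference the secret, either through `imagePullSecrets` in each pod spec or by adding it to each namespace's service account.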