Tag: agile

Completely Complex Cloud Cluster Capacity Crisis: Cool as a Cucumber in Kubernetes

So… capacity. There’s never enough. This is why people like cloud computing: you can expand for some extra cash. There are different ways to expand: scale out (add more of the same) and scale up (make the same things bigger). Normally in cloud you are focused on scale-out, but, well, you need big enough pieces to…

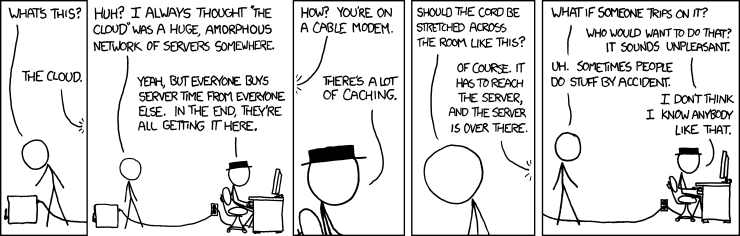

Cloud simplicity: NOT!

(Cue Wayne’s World music on the ‘NOT!’.) So. GitLab, GitLab Runner, Kubernetes, Google Cloud Platform, Google Kubernetes Engine, Google Cloud Storage. Helm. MinIO. Why? OK, our pipelines use ‘Docker in Docker’ as a means of constructing a Docker image while inside a ‘stage’ that is itself a Docker image. Why? I don’t want to expose the…

Google adds Filestore. But what about the Kubernetes unmount issue?

OK, like all good Google products, it’s ‘beta’. But, Filestore. This replaces the hackery that people like me have been doing. Except it doesn’t really; it’s actually kind of the same thing. It’s still NFS. The issue is still open: with no umount, dangling NFS mounts are left on the host. But, progress. Assuming I can work…

Got a host directory? Wish it were seamlessly mounted in your VM? Want to avoid NFS? 9p!

I wrote earlier about my life-long affair with NFS. 25 years of my life have gone into RPC portmappers, NIS, etc. But, so many letters in NFS; is there a better way? Enter 9p. This post has a pretty good description. But, in a nutshell, 9p will use virtio transport, so no IP, no…

Cloud billing and bugs: log ingestion

So I’m using Google Cloud Platform (GCP) with Google Kubernetes Engine (GKE). It’s not a big deployment (3 instances of 4 vCPU / 7.5 GB RAM), but it is now up to about $320/month. And I’m looking at the log ingestion feature. You pay for the bytes, API calls, ingestion, retrieval. See the model here. Feature Price1 Free allotment per…