Everyone agrees that the need for logging has not diminished in the universe. Many tools exist to ingest and normalise logs (Splunk, Logstash, …). A common set is the ‘EFK’ stack (Elasticsearch, Fluentd, Kibana). In this model, Fluentd listens on some port and logs are sent there. It has a set of unbelievably poorly documented parsers that can be run to ‘normalise’ the data before it is written to the database.

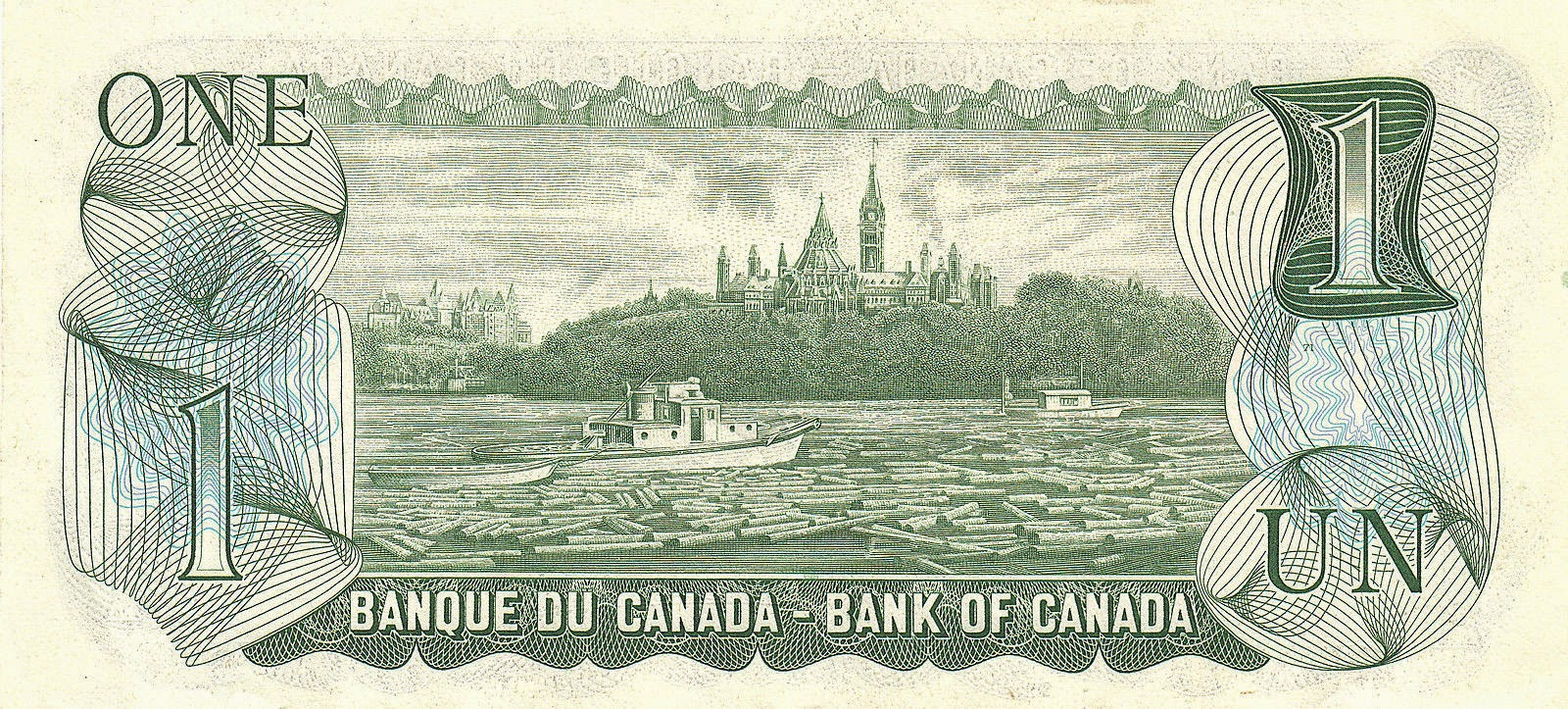

(P.S. About the image: it appears Canada understood earlier than most that logs were valuable. This is a scene from behind Parliament Hill.)

Elasticsearch is a ‘document’ database. You have an index, a time, and a blob, usually JSON. A stored document might look like this:

```json
{
  "_index": "logstash-2018.08.28",
  "_type": "fluentd",
  "_id": "qURhgGUBePf7Og9P7DPY",
  "_score": 1.0,
  "_source": {
    "container_name": "/dockercompose_front-end_1",
    "source": "stdout",
    "log": "::ffff:172.18.0.7 - - [28/Aug/2018:11:53:40 +0000] \"GET /metrics HTTP/1.1\" 200 - \"-\" \"Prometheus/2.3.2\"",
    "container_id": "523dc81560bad1f35054720398b6211fa2758648ff4d06225536a63f24696226",
    "@timestamp": "2018-08-28T11:53:40.000000000+00:00"
  }
}
```

Now, you’ll note, you get a single field called ‘log’. But that log looks suspiciously like an Apache-style access-log line, and you really probably wanted it parsed out into remote host, URL, response code, etc.
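To make that concrete, here is a minimal sketch in JavaScript (the language of the front end below) of splitting such a line into fields. It assumes the Apache ‘combined’ layout seen in the sample; the regex, the function name parseAccessLine, and the field names (remote_host, status, user_agent and so on) are illustrative choices, not what Fluentd’s parsers or any Elasticsearch template produce. Lines that don’t match return null.

```javascript
// Minimal sketch: split an Apache "combined" access-log line into fields.
// Field names are illustrative assumptions, not a Fluentd/Elasticsearch schema.
const COMBINED_RE =
  /^(\S+) (\S+) (\S+) \[([^\]]+)\] "(\S+) (\S+) ([^"]*)" (\d{3}) (\S+) "([^"]*)" "([^"]*)"$/;

function parseAccessLine(line) {
  const m = COMBINED_RE.exec(line);
  if (!m) return null; // not a combined-format line; leave it for another parser
  return {
    remote_host: m[1],
    user: m[3],
    time: m[4],
    method: m[5],
    path: m[6],
    protocol: m[7],
    status: Number(m[8]),
    // "-" means no response size was logged
    bytes: m[9] === "-" ? null : Number(m[9]),
    referer: m[10],
    user_agent: m[11],
  };
}

// The 'log' value from the document above, with the JSON escapes removed.
const sample =
  '::ffff:172.18.0.7 - - [28/Aug/2018:11:53:40 +0000] "GET /metrics HTTP/1.1" 200 - "-" "Prometheus/2.3.2"';
console.log(parseAccessLine(sample));
```

Anything that comes back null needs some other parser, which is where the per-service format problem starts.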

But here comes the dilemma. You have many things in your polyglot microservice application world, each with its own unique and wonderful log format and set of data.

Do you:

- Make a separate output database for each, with a custom ‘schema’ per database?

- Store them all together in a single db, and make your queries know the format (e.g. the queries run the regex etc. to parse ‘log’)?

- Store them all together in a single db, split into the proper output fields, and have the queries know which entries have which fields?

None are great answers.

Worse, you have probably done this with the Docker fluentd logging driver. That means you have a Fluentd server somewhere using @type forward to shovel records into Elasticsearch. This in turn means you can’t use the parsers/grok etc., since @type forward takes records as-is, assuming they were already formatted by the upstream Fluentd (in this case the Docker driver, which doesn’t format them).

I thought I would get clever. In my Node/Express front end, I did:

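```javascript
const express = require('express');
const app = express();

// Only ship access logs to Fluentd when FLUENT_LOGGER (the Fluentd host) is set.
if (process.env.FLUENT_LOGGER) {
  const logger = require('express-fluent-logger');
  app.use(logger('tagName', { host: process.env.FLUENT_LOGGER, port: 24224, timeout: 3.0, responseHeaders: ['x-userid'] }));
}
```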

But while I had perfectly formatted logs, I ended up with a format unique to this ‘type’ (none of the container_name/source fields from above).

If instead I did:

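```javascript
const express = require('express');
const logging = require('express-logging');
const logger = require('logops');

const app = express();
// Log each request via express-logging, using logops as the logger.
app.use(logging(logger));
```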

Things got weirder. Now I have a beautifully formatted JSON object, butchered with quote-escapes, jammed into a string called log.
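The escaped string can at least be recovered: the JSON the app printed to stdout is intact inside ‘log’, so parsing that field gives the object back. Below is a minimal JavaScript sketch, assuming the record shape from the Elasticsearch example above. The function name unwrapJsonLog, the sample payload, and the merge policy (Docker metadata such as container_name wins on key collisions, and the raw string is dropped) are hypothetical choices; where it would run, somewhere before the records reach Elasticsearch, is left open.

```javascript
// Sketch: recover a JSON object that ended up as an escaped string in the
// `log` field. The record shape is assumed from the example document above.
function unwrapJsonLog(record) {
  const line = typeof record.log === "string" ? record.log.trim() : "";
  // Only lines that look like a JSON object are candidates.
  if (!line.startsWith("{")) return record;
  let parsed;
  try {
    parsed = JSON.parse(line); // a string starting with "{" yields an object or throws
  } catch (err) {
    return record; // looked like JSON but wasn't; keep the raw string
  }
  // Drop the raw string, then let the Docker metadata win on key collisions.
  const { log: _raw, ...metadata } = record;
  return { ...parsed, ...metadata };
}

// Hypothetical record; the payload fields are made up for illustration.
const record = {
  container_name: "/dockercompose_front-end_1",
  source: "stdout",
  log: '{"time":"2018-08-28T11:53:40.000Z","lvl":"INFO","msg":"Request"}',
};
console.log(unwrapJsonLog(record));
```

This only undoes the escaping; each service’s unwrapped fields are still different, so the question below stands.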

So, gentle reader, how would you recommend a microservice app with ~10 pieces, all polyglot, each doing a different role, handle its access logs etc. so that they’re usable?
